Humans sometimes masquerading as AI.

An AI chatbot is not your therapist, and not your lover, and inviting chatbots to private meetings isn’t a good idea, especially if they turn out to be some person somewhere.

This story, by and about a data worker in Nairobi, is jarring for a number of reasons.

It also feels like everyone’s being gaslit.

Asia, M. G. (2025). The Quiet Cost of Emotional Labor. In: M. Miceli, A. Dinika, K. Kauffman, C. Salim Wagner, and L. Sachenbacher (eds.). Data Workers’ Inquiry. Creative Commons BY 4.0. https://data-workers.org/michael/

Chat moderators are hired by companies such as Texting Factory, Cloudworkers, and New Media Services to impersonate fabricated identities, often romantic or sexual, and chat with paying users who believe they’re forming genuine connections. The goal is to keep users engaged, meet message quotas, and never reveal who you really are. It’s work that demands constant emotional performance: pretending to be someone you’re not, feeling what you don’t feel, and expressing affection you don’t mean.

Over time, I began to suspect that I wasn’t just chatting with lonely users. I was also helping to train AI companions, systems designed to simulate love, empathy, and intimacy. Many of us believed we were simultaneously impersonating chatbots and teaching them how to replace us. Every joke, confession, and “I love you” became data to refine the next generation of conversational AI.

So people think they’re talking to an “AI” and sometimes a human is actually engaging with them? That’s unnerving.

I think we have to assume that an AI chatbot could just as easily be remote controlled, at least some portion of the time, by some human worker somewhere who is underpaid, or maybe even trafficked. We already know that these chatlogs are sometimes reviewed by humans. The security issues we already knew about were bad enough: prompt injection attacks, and chatlogs becoming public by mistake or via lawsuits, which matters because data re-identification is a thing even if released data is anonymized. And a lot of people don’t even know that OpenAI was court ordered to retain chatlogs, even for conversations users thought they were deleting, until finally there was an order that they could start actually deleting deleted logs going forward (while still keeping the ones that had already been retained). And that’s even besides people seemingly hooked on chatbot tools, whether it’s people too often trusting potentially insecure “vibe coding” littered with command injections or secret leaks, or just people leaning on LLMs a bit like compulsive gamblers.

I already thought it was a problem that so-called self-driving cars are taken over remotely by people, sometimes maybe in other countries, who maybe don’t even have a driver’s license at all, never mind one from the state they’re driving these cars in remotely. I have already said that AI therapy can’t exist, because therapy is about a relationship with a trained and certified therapist ensuring a professional human relationship with clients. And one wonders if they’re just paying someone in some other country, without any education or mental health training, to pretend to be chatbot therapists as well as AI companions.

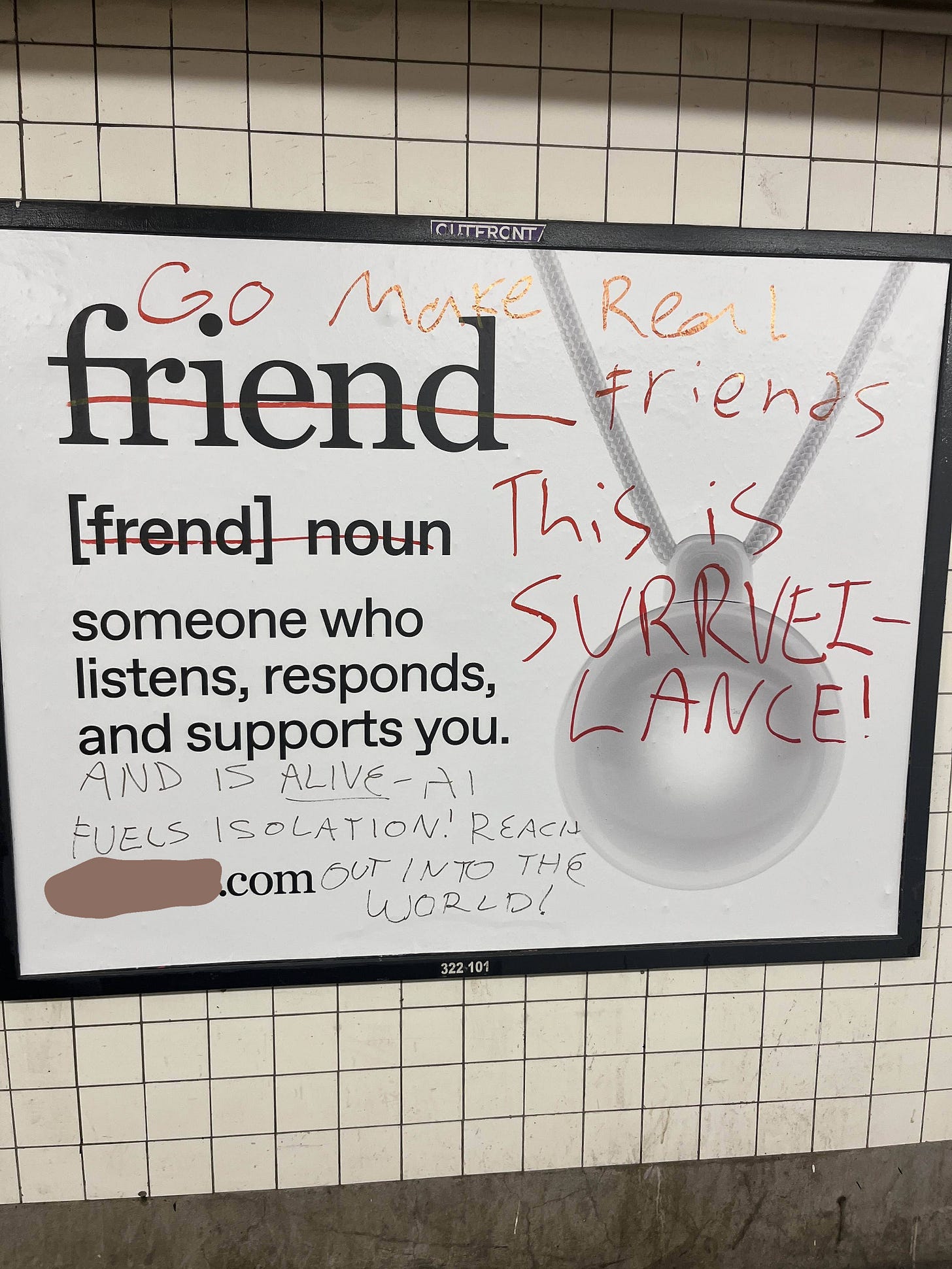

Image: a photo of a poster on a New York City subway wall advertising an AI companion service. It is styled like a dictionary definition: “friend, noun, someone who listens, responds and supports you.” The poster is vandalized with things written in marker. Adding to the definition, it says: “And is alive. AI fuels isolation reach out into the world!” and written larger is: “Go make real friends, this is surveillance.” (Photo credit: someone called Emma in the Ed Zitron Discord, used with permission.) (Someone else’s photos: tumblr)

Then I start feeling even more suspicious and wonder if some of the AI chatbots that have been convincing people to do harm to themselves and others, or convincing elderly dementia patients to meet somewhere, are maybe just prisoners trapped in scam centers, being tortured, and going off the rails. Humans trafficked to places like the scam centers in Myanmar have been forced into sexting people for pig butchering schemes used on people in the US, Europe, and Australia to scam them out of money. So who knows? It’s also made me start wondering if there’s more to the story than just the chatbot-as-oracle phenomenon, where people use chatbots almost like a Magic 8 Ball or the I Ching. Maybe there’s more going on behind the effects Jacob Ward is calling “AI distortion”, referring to all the strange psychological ramifications around stuff called AI. I started to speculate: what if people can sense, on some unconscious level, when ‘there’s a human on the other end’, so to speak? And what if that has been sparking some people to have these “I’ve awakened my chatbot” ideas, as if they have found an aware being within the machine? Maybe those “awakening” moments are perceived in the moments a real human is there, and that would be pretty crazymaking and disorienting if you think about it. I haven’t used chatbots since my initial experience trying one out and finding it problematic and prone to getting things wrong. But much of the documentation I’ve seen has been disturbing. I have to wonder if people are just being gaslit with these “machines” while someone on the other end really is trapped somewhere being forced into impersonating a bot, at least some of the time. How would we even know one way or another, until more people start spilling the beans? And that’s assuming most are not forced into silence by NDAs.

I really hope it’s just that the many revelations of trickery and obfuscation, including elected officials in government hiding secrets about data centers with NDAs (nondisclosure agreements), have led me to become suspicious of everything involving AI. But I do think there should be a thorough independent investigation into this whole industry, given all the stories that have already come out. It’s not a maybe, there are so many instances of sketch. It’s no wonder the insurance companies don’t want to cover liability for AI stuff. And while we’re at it, this also seems like a huge proposed energy expenditure for what seems to be amounting to just another iteration of outsourcing and offshoring. And is it even all for the purposes they claim? I realize I’m “just asking questions” and maybe these questions are highly speculative and even alarmist, or sound paranoid, but unfortunately so often when I’ve been suspicious, I’ve been right to be suspicious. Even if it turns out not to be for exactly the reasons I thought, there’s always a there there. And this whole industry stinks with bizarrely suspicious stuff going on, from the Flock camera surveillance state to the Network State.

I had a dream of soylent chatbots made out of people.

I had a dream that news broke about AI companies and it came out that all the chatbot companies were in fact just employing a bunch of people to write the chatbot generated content, like a Mechanical Turk hoax. And that explained all the lying and making stuff up.

PC GAMER - $1 billion AI company co-founder admits that its $100 a month transcription service was originally ‘two guys surviving on pizza’ and typing out notes by hand. By Andy Edser, published November 14, 2025.

“We charged $100 a month for an AI that was really just two guys surviving on pizza,” Firefly co-founder Sam Udotong proudly declared in a LinkedIn post earlier this week (via Futurism). “We told our customers there’s an ‘AI that’ll join a meeting’,” said Udotong. “In reality it was just me and my co-founder calling in to the meeting sitting there silently and taking notes by hand.”

FORTUNE - A tech CEO has been charged with fraud for saying his e-commerce startup was powered by AI, when it was actually just using manual human labor. By Chris Morris, Former Contributing Writer, April 11, 2025, 11:01 AM ET.

Startup tech company Nate promised consumers easier shopping with the help of artificial intelligence. But the Justice Department says there was no miracle tech behind the checkout app’s transactions. Instead, they were handled by humans in the Philippines and Romania.

The New York Times - How Self-Driving Cars Get Help From Humans Hundreds of Miles Away. By Cade Metz, Jason Henry, Ben Laffin, Rebecca Lieberman and Yiwen Lu, Sept. 3, 2024 (internet archive link).

Inside companies like Zoox, this kind of human assistance is taken for granted. Outside such companies, few realize that autonomous vehicles are not completely autonomous. For years, companies avoided mentioning the remote assistance provided to their self-driving cars. The illusion of complete autonomy helped to draw attention to their technology and encourage venture capitalists to invest the billions of dollars needed to build increasingly effective autonomous vehicles. “There is a ‘Wizard of Oz’ flavor to this,” said Gary Marcus, an entrepreneur and a professor emeritus of psychology and neural science at New York University who specializes in A.I. and autonomous machines.

Business Insider - Amazon’s Just Walk Out technology relies on hundreds of workers in India watching you shop. By Alex Bitter, Apr 3, 2024, 1:10 PM ET.

Amazon’s Just Walk Out technology had a secret ingredient: Roughly 1,000 workers in India who review what you pick up, set down, and walk out of its stores with. The company touted the technology, which allowed customers to bypass traditional checkouts, as an achievement powered entirely by computer vision. But Just Walk Out was still very reliant on humans, The Information reported on Tuesday, citing an unnamed person who has worked on Just Walk Out technology.

Reuters - OpenAI loses fight to keep ChatGPT logs secret in copyright case. By Blake Brittain, December 3, 2025, 1:49 PM EST.

OpenAI must produce millions of anonymized chat logs from ChatGPT users in its high-stakes copyright dispute with the New York Times and other news outlets, a federal judge in Manhattan ruled. U.S. Magistrate Judge Ona Wang in a decision made public on Wednesday said that the 20 million logs were relevant to the outlets’ claims and that handing them over would not risk violating users’ privacy.

Asia, M. G. (2025). The Quiet Cost of Emotional Labor. In: M. Miceli, A. Dinika, K. Kauffman, C. Salim Wagner, and L. Sachenbacher (eds.). Data Workers’ Inquiry. Creative Commons BY 4.0. https://data-workers.org/michael/

Chat moderators are hired by companies such as Texting Factory, Cloudworkers, and New Media Services to impersonate fabricated identities, often romantic or sexual, and chat with paying users who believe they’re forming genuine connections. The goal is to keep users engaged, meet message quotas, and never reveal who you really are.

I was going to include a letter to my reps, but then I thought I’m just going to write about all this to my reps, or just send a print, what the hell. Remember, elected officials are sometimes getting nothing but industry PR bs from lobbyists, or misinformation from their own echo chambers. We know this, and constituents need to bust in, clue them in, and demand answers. Otherwise they may believe that the affordability crisis really is just a hoax, or that cryptocurrency isn’t horrendous.

From Abigail Spanberger, former CIA, former member of Congress from Virginia, and now Governor-elect of Virginia, speaking on the podcast SpyTalk, Sept 27, 2024:

“One of the important things is to endeavour to understand what might be some of the influencing factors. And I had a really interesting conversation with a Democratic colleague of mine the other day who was talking and reflecting on one of the more right-wing MAGA people who’s in the news frequently. He was talking to this person in a casual setting on Capitol Hill. They just bumped into each other outside of votes, outside of committee meetings and struck up a conversation. And this person asked him about a particular issue that this person has read about in the news. And my Democratic colleague said “I literally have no idea what you’re talking about. What is this aspect of the news?” And the individual shows my colleague their phone, and starts scrolling through and just says, as I would call it, a conspiracy, not a news source, kind of accusation of something that’s occurring. And my colleague said to this other lawmaker, “These aren’t news sources.” And he reported back to me, and sort of reflecting on this conversation, that it was incredibly informative for him to have this conversation, where it was so clear to him in the conversation, that some of the things that he had long thought was performative, or truly just ideological rhetoric, is also grounded in some of the misinformation that this person is perceiving through social media.”

Turns out, sometimes politicians are humans too. /s