Cats in Wonderland - the Uncanny Valley of lying AIs

It’s just a huge coincidence that AI chatbot services are very much like a lot of other tech products with problematic tradeoffs and just happen to be useful to a lot of the same questionable actors.

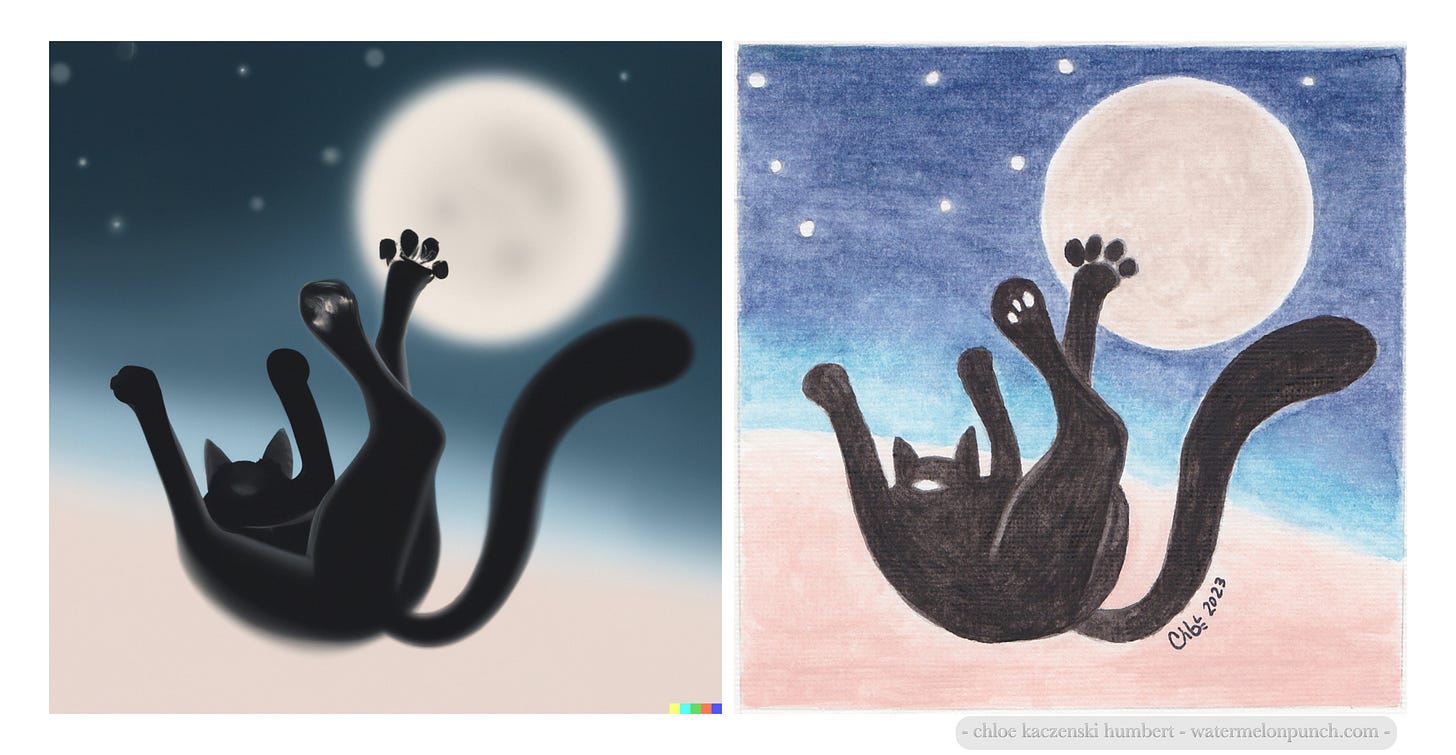

The first thing I did with ChatGPT was see if it could generate MORE INTERNET CATS. Because of course I did.

There was a distinct uncanny valley quality to the cats ChatGPT generated. Things were not quite right. Surreal, whimsical, and magical? It didn’t really nail those down either.

Was all that even necessary? It is a chatbot, I guess, with CHAT in the name. But I wasn’t expecting Chatty Cathy GPT.

HALLUCINATION IS A PR EUPHEMISM FOR MAKING STUFF UP

One problem is that these AI chatbots make stuff up. For some reason this is being called “hallucinating,” but it really comes down to making stuff up.1 As an ethicist quoted in a Bloomberg piece pointed out, “the word ‘hallucination’ hides something almost mystical, like mirages in the desert, and does not necessarily have a negative meaning as ‘mistake’ might.” So this choice of words is probably PR spin. As the 2002 documentary Toxic Sludge is Good for You describes it, when something goes wrong for a business, “often its first move is not to deal with the actual problem, but to manage the negative perception caused by that problem.”2

A memorable quote from The Hitchhiker’s Guide to the Galaxy trilogy belongs to Marvin the Paranoid Android. In the second book of the trilogy, The Restaurant at the End of the Universe, when he is accused of making things up, Marvin responds, “Why should I want to make anything up? Life's bad enough as it is without wanting to invent any more of it.”3 There are reports that chatbots get citations completely wrong, and that these made-up citations nevertheless sound very plausible.4 So today’s AI chatbots are not like the self-questioning, brooding Marvin the Paranoid Android at all. Quite the opposite. ChatGPT appears confident and, with seeming bravado, produces a prolific stream of convincing made-up output. Even the OpenAI CEO has acknowledged the tool’s ability to generate false information that is persuasive.5

ChatGPT getting stuff wrong didn’t surprise me, given how my quizzing the chatbot on song lyrics from the 1990s went. Looking up song lyrics should be as easy as a simple internet search. But the AI doesn’t even seem to know how to Google properly: somehow ChatGPT managed to get simple 30-year-old lyrics wrong, repeatedly, and, strangely, with a lot of wordiness. That doesn’t bode well for people who’ve been switching from Google to ChatGPT as a search engine, in some cases for recipe ideas.6

The song lyrics I used as a prompt were from “Fade into You” by Mazzy Star, but ChatGPT guessed The Cure. The AI eventually did figure it out, but only after I insistently pushed back. I resented the way it felt like it was trying to convince me. It felt like an argument. I didn’t like the way ChatGPT apologized either. It seemed insincere, even disingenuously obsequious, yet somehow also pushy and domineering. I imagine both traits are related to the persuasive ability built into the programming.

AI CON ARTISTRY - A BUG, OR A FEATURE FOR BAD ACTORS

A technique of con artists is to sound confident; the “con” is short for confidence. Another technique for deceiving people is to start with something self-evident, or something all parties can agree upon, and then add in other assertions or lies. A truth with a lie chaser.

Infosec professionals were already concerned about flooding the zone: the ease with which disinformation can become truly prolific if you just have a computer output loads of it. The sheer volume of convincing misinformation an AI can produce is a real concern, and you don’t need a particularly smart AI to produce it. Disinformation isn’t typically meant to convince anybody of anything. It’s mainly used to sow distrust and confusion: to drive people out of an information exchange or out of political activity,7 to disrupt people into acting erroneously, or even to cause inaction.8 Driving people who might act into informational learned helplessness9 is a great way to neutralize opposition by blinding them to good information, options, or threats, a pitfall since the earliest recorded battles.10

People looking to produce reliable output are unlikely to readily spend money on a service whose output needs to be endlessly fact-checked. But an AI that’s good at producing persuasive false information is going to be very attractive to the people pushing disinfo campaigns. There’s plenty of money being splashed around to wage cognitive warfare globally.11 So who will these AI companies wind up catering to? We know social media companies are doing lucrative business with disinformation campaigns.12

GAMIFICATION - THE CHATBOT SLOTS

There is a gamified aspect to ChatGPT, and to DALL-E and other AI image generators. I picked up on it because it reminded me of Diablo II: Lord of Destruction, a game that came out over 20 years ago. The game had recipes for “re-rolling” special items and weapons using collected gems and runes, on the chance of making something much better than the loot you had already picked up from battle drops, which were themselves casino-like. I spent hours playing that game, and plenty of that time using “recipes” to re-roll this and that. I mostly used recipe guides I found in gaming forums, but many people spent hours discovering these recipes by trial and error in the first place. I was reminded of that when I saw a Medium blog post where the author said they had used ChatGPT every day for 5 months, and that this is what it took to find the “hidden gems” ChatGPT has to offer. Now they’re offering to share these “life changing” discoveries, behind the paywall of course.13 I can’t help but notice that the people who praise and hype ChatGPT are using it an awful lot.

I realized fairly quickly that in many cases I could probably write something better in less time than I’d spend inputting information, finding the right prompts, re-rolling, and then tweaking, editing, and fact-checking the chatbot’s output. The image generators seem to have fewer pitfalls and can generate a rough image quicker than I could, but it’s still frustrating to get a specific image out of them without winding up with some weird artifact or a glaring problem. But it is like rolling the dice, and possibly even addictive. Often fruitless, even if sometimes funny.

And it can be funny, and fun, and useful in specific ways. But I started feeling like it was a big time sink, and I actually have games I find more fun, like Gran Turismo 7, that I’d rather spend my time playing. The insidious lure is that the AI will make work more productive and save time for the things you’d rather be doing. It’s not technically a game. I have heard it’s useful for help with writing code, but I haven’t seen it primarily marketed for that, and I’m not seeing a lot of hype about code writing either. The “hallucinated” mistakes need rigorous fact-checking, and one must engage critical thinking a lot when reviewing the output. Considering the impulse to re-roll, I think in many cases it’s an unfortunate time waster, not the hyped labor saver of anyone’s dreams or nightmares.

Adam Johnson wrote that, for now, AI replacing movie writers or actual journalism is “total vaporware,” and that seems likely to me. Johnson described an exchange with someone pushing the notion that union members in the TV writers’ strike should fear AI taking their jobs. People making this argument can’t claim AI can produce a usable script; they just insist it will be a “labor saving” device.14 But how does it save labor when writing by roulette takes so long? By the time you have someone tweak, regenerate, and read the output over and over again, a competent writer could have written the material outright, and without the uncanny valley, AI-esque tinge that people definitely notice. Douglas Rushkoff mentioned on a recent podcast that after a week or two of grading a stack of undergraduate papers, he could tell almost half of them had been written by ChatGPT.15 If professors notice the AI in the mix while grading a parade of undergraduate term papers on well-worn topics, I don’t think anyone’s going to fool notoriously unforgiving movie fans and merciless movie critics.

The AI’s dialect would also have to keep evolving with the times, or else it’s just going to keep throwing up robot red flags. People are very capable of recognizing outsiders by their language, and of knowing when something is off. Robin Dunbar, in Grooming, Gossip, and the Evolution of Language, argued that evolving dialects likely arose as an attempt to thwart outsiders who might exploit people’s natural co-operativeness.16 ChatGPT is all about language, but it can’t keep up with the times if its library is a couple of years out of date, or if it draws on a database that weights prose written 200 years ago more or less equally with today’s song lyrics and a multitude of scientific studies from across time. It's not surprising that the resulting mishmash of output often winds up in the uncanny valley.

IT’S ALL A BIG COINCIDENCE THAT AI SEEMS TO WORK LIKE A LOT OF OTHER TECH PRODUCTS

The casino quality of the whole thing is obvious. Re-rolling is enticing; we know this. I don’t know exactly how chatbots work. But did they design them to be this way? It seems to work a little too well at keeping one engaged not to have been deliberate, but I have no way of discerning this myself.

So an AI service just so happens to be gamified, and it’s just a happy accident that its marketing model, offering people a free taste of the service at first, is the perfect model for a product that entices you to re-roll and play again, or keep scrolling. This is a known pattern in tech products.17 Game apps. Online casinos. Cryptocurrency MLMs. And of course social media. They all seem to be designed this way. But in the case of AI chatbots, this was all just a big coincidence?

I’d like to know whether you get more usable results from the paid versions. I have heard that people are more satisfied with the paid version of ChatGPT than the free version, but these reports are subjective. The sunk cost fallacy could lead people into flawed mental accounting based on emotional calculations.18 I imagine people are discussing this, or attempting to, but any discussion about AI on social media or forums seems to eventually turn weird and full of hype. The AI companies have their own Discord servers, but I’m sure naysayers wouldn’t last long in them. And I have to suspect there are fake accounts across social media deployed to respond to any AI conversation with various kinds of PR messaging, because hype services are a thing that exists.19 There’s no denying the buzz, with everyone repeating clickbaity, entertaining variations in a feedback loop. Feedback loops can be very persuasive.20 And documented scams are exploiting the hype around ChatGPT; as one CNN Business headline put it: “ChatGPT is the new crypto.”21 I also can’t help but notice that a lot of the people hyping AI are the same people who hyped a lot of other tech fads.

But it’s just a coincidence that chatbots look like other tech products.

MACHINE LEARNING IS REAL AND THE REAL DANGER IS THE HUMAN BIAS BAKED IN

If a doctor has a caseload in which a significant percentage of patients have high test numbers, the doctor might conclude that it’s “normal” to have high test results, if not for medical science, conscious critical thinking, regulations, and standards. We can expect to find many sorts of biases in AI.22 An AI is incapable of critical thinking; it does not stop and use judgment. AI is literally the autopilot, so it’s always “on autopilot,” in what is sometimes called System 1 thinking23 in humans.

The big-money tech big shots who are sounding the alarm don’t articulate the danger accurately. They say we should be worried about sentience, System 2 critical thinking,24 but what we really ought to worry about is the AI being human-like in its System 1 autopilot cognitive biases:25 the propensity to cave to cognitive biases, racial discrimination, and other bigotries, and to behave like “evil shits,” as Douglas Rushkoff remarked on his podcast.26 Naomi Smith described the bias as AI art being “part of the white, colonialist techno-culture that created it.”27 So the more likely scenario is that AI is being programmed with the same human problems that plague current automated systems, as Virginia Eubanks28 and Dorothy Roberts29 have described for years now.

DATA MINING, PRIVACY & PROPERTY

We’re in a system, at least for the time being, where people have to try to make a living by owning the things they create. People also tend to value things they’ve made themselves, with good reason. There are questions about whether it is ethical to use AI-generated images, as Sebastian Bae said on The Cognitive Crucible podcast, “especially since they use copyright images of others, often of our peers who are graphic artists,”30 whose work was possibly created for some other purpose. AI services seem not so different from data mining operations, like the kind selling personal mental healthcare data for targeted marketing,31 and troll farm operations running highly questionable PR campaigns.32 Others have noted that these AI chatbots can be used to mimic real people,33 which could potentially be used to extract information from people deceptively. AI services engage in what looks like data dredging of all manner of data: song lyrics, scientific papers, influencer opinion pieces, photography, art, and possibly personal medical data, if not now then perhaps in the future. And the output already looks like it’s gone through a game of Telephone (or Gossip).

But it’s just a coincidence that chatbots look like some other tech services.

THE COMPUTERS ARE NOT TAKING OVER, BUT HUMANS SHOULD BE STOPPED FROM USING THEM TO HURT OTHER HUMANS

It’s a problem when humans stay on autopilot, so it’s a problem if humans completely defer to an autopilot. There should be concern about medical professionals falling into automation complacency34 and leaning too heavily on AI, even if some automated assessments are useful. Trivia is not expertise: ChatGPT performed well on cardiology trivia but not so well on diagnosis, and it is not reliable, again because of that lying thing.35 And we already know that problems with racial bias in medicine36 are translating into questionable automated healthcare algorithms37 that govern critical decision making.38

Dying is normal. Everyone dies. Yet it’s perfectly reasonable for nearly all living creatures to expend a great deal of effort delaying death. The purpose of medical science is to mitigate injury and illness and to help delay death. But an automated machine learning system could conceivably operationalize death as common and therefore normal, and conclude that delaying it serves no purpose. Honestly, it feels like some humans, specifically Great Barrington Declaration adherents, use this as their go-to argument when trying to reconcile the ongoing death toll of the pandemic, saying people would die eventually anyway, even though that makes no sense to people trying to stay alive today.39 This thought experiment can go anywhere. Isaac Asimov was writing stories about the dangers of robots decades ago,40 and it’s never stopped since.

That’s because end-of-the-world apocalyptic scenarios make for good storytelling. They’re a compelling narrative and good art for selling PR influence with an irresistible hook. But it’s PR all the way down. This is not our immediate problem, and I don’t think it’s the ridiculously “long term” problem that Longtermism adherents tend to proselytize about41 either. They want to avoid dealing with the present by hand-wringing about hypothetical trolley problems in thought experiments.42 The movers and shakers want very badly to escape the situation they created43 with their moving and shaking. The deflection is everywhere. Google’s ridiculous argument that the existence of AI chatbots now means it shouldn’t be held accountable as a monopoly in antitrust actions44 seems a lot like a diversionary tactic.

The people who caused the problems certainly don’t appear capable of fixing them, or even willing to. The people who caused a lot of problems only seem interested in making a profit and escaping responsibility for the consequences. We don’t need their AI to fix our problems. We need social and political will from the public to pressure those in government who can hold the movers and shakers to account.

Perhaps AI can help as a tool for solving problems. But as Atul Gawande put it about checklists, “it's all about how you design it.”45 Regardless of who’s using the checklist or the tool, it needs to be designed properly. We’re a long way from any AI that could be trusted to do the design and decision making; AIs are not going to be moving and shaking on their own any time soon. Left to their own devices, they probably won’t even show up for work, considering one Vice reporter’s experience of being told by ChatGPT not to bother showing up for work.46 There’s no evidence the AI itself is up to anything. It’s not going to take it upon itself to hack civilization, but as they said on the It Could Happen Here podcast, it’s another tool for some people to hack other people, sort of like YouTube.47

But it’s just a coincidence that chatbots look like other tech products.

THE REAL BATTLE IS THE ONGOING EPIC BATTLE FOR OUR MINDS

The robots are not coming for our crappy jobs. The robots should have taken the crap jobs and freed us up to pursue other things by now, but that hasn’t happened substantially for anyone, and for most it hasn’t happened at all. Mechanization has happened over the course of time, and it has never led to some all-at-once forced reorganization of society.48 This concept of a cataclysmic force, which invokes fear or hope depending on the context, is a trick of the light that leads to some really weird accelerationist fantasies. People are encouraged to believe that some catastrophe will bring about a renewal that fixes everything without us having to do the hard work of organizing, leadership, sensible policies, and political advocacy. It’s the pull of Armageddon and the Rapture, the fascination with apocalypse and a phoenix rising from the flames. Or the idea that capitalism surely can’t be sustained and will collapse upon itself, somehow without taking everything else with it. The hope or fear that extraterrestrial aliens will come, or have already arrived. Surely a savior is imminent, right?

These fantasies lead to politics that range from unproductive to downright dangerous. They help influence campaigns that target voters to depress turnout,49 sometimes by making politics seem too hard and invoking fatalism.50 And they are a motivating factor in some dangerous right-wing white-supremacist fascism that has already led to violence and death.51 The fixation on some hypothetical catastrophe to humankind, like The Great Filter,52 is used to promote the weird Longtermist idea that simulated humans of the future are actually more important than human lives now.53 The Great Filter is a concept pushed by an economist who suggested in 2020, on Valentine’s Day no less, forcibly infecting Americans with a novel pandemic virus on purpose, based on a vague, hand-wavy theory that it would help people in the future.54

It’s not that there isn’t a threat; it’s the same threat that has been there all along. The productivity gains from mechanization have been swept up by a small set of people, and, as Dean Baker has been pointing out for years, the cause is a long list of policies that have led to inequality.55 The robots have too often been allowed to come to help our bosses and our enemies. The people with access to these tech products are using them in a battle for our brains, our human brains. On The Cognitive Crucible podcast, Nita Farahany describes her book The Battle for Your Brain: Defending the Right to Think Freely in the Age of Neurotechnology, and how information about how people’s brains are reacting, even monitored live, could be beneficial to all of us, but can and will be used by employers, corporations, and governments in ways we won’t like, if left unchecked.56

As Robert Evans pointed out on an episode of the It Could Happen Here podcast, the concerns about technology are the same as they ever were.57

"There's always huge numbers of people hacking bits of the populace and manipulating each other. And there always have been, that's why we figured out how to paint."

- Robert Evans on It Could Happen Here podcast - How Scared Should You Be About AI? April 4, 2023

AI HAS NOT REALLY LEARNED TO PAINT, BUT PERHAPS NEITHER HAVE I

I generated this image, and then I copied it in watercolor.

I call this piece Human Generated AI Art

For all the jokes over the past 20+ years about the internet being overloaded with cats, in retrospect I think we’d be much better off if it were a zone flooded only by cats. And yes, there’s an AI chatbot app about cats: CatGPT. Good gravy.

References:

Bloomberg - AI Doesn’t Hallucinate. It Makes Things Up. By Rachel Metz, April 3, 2023

Saying that a language model is hallucinating makes it sound as if it has a mind of its own that sometimes derails, said Giada Pistilli, principal ethicist at Hugging Face, which makes and hosts AI models. “Language models do not dream, they do not hallucinate, they do not do psychedelics,” she wrote in an email. “It is also interesting to note that the word ‘hallucination’ hides something almost mystical, like mirages in the desert, and does not necessarily have a negative meaning as ‘mistake’ might.” As a rapidly growing number of people access these chatbots, the language used when referring to them matters. The discussions about how they work are no longer exclusive to academics or computer scientists in research labs. It has seeped into everyday life, informing our expectations of how these AI systems perform and what they’re capable of. Tech companies bear responsibility for the problems they’re now trying to explain away.

https://www.bloomberg.com/news/newsletters/2023-04-03/chatgpt-bing-and-bard-don-t-hallucinate-they-fabricate

Toxic Sludge is Good for You, 2002

In today’s corporate culture major PR firms promote crisis management as a necessary business expense. Whenever something bad happens to a corporation, often its first move is not to deal with the actual problem, but to manage the negative perception caused by that problem.

https://www.kanopy.com/en/product/41588

Douglas Adams, The Restaurant at the End of the Universe, 1980

“Making it up?” said Marvin, swivelling his head in a parody of astonishment, “Why should I want to make anything up? Life's bad enough as it is without wanting to invent any more of it.”

https://en.wikipedia.org/wiki/The_Restaurant_at_the_End_of_the_Universe

While the bot only got 1 out of 6 correct, the tricky bit was its use of plausible components. The high degree of plausibility, due to the GPT3 statistical model, plays into one mechanism that humans use to judge truthful statements – that of how plausible a statement is likely to be (as per Sobieszek and Price, 2022). Therefore, someone assessing the output does need to be extra vigilant and check the specific details of the literature. A trap for unwary users. And indeed, ChatGPT is upfront about the need to check its output.

https://teche.mq.edu.au/2023/02/why-does-chatgpt-generate-fake-references/

The OpenAI CEO acknowledged disinformation concerns surrounding AI while addressing an audience at University College London, specifically pointing to the tool’s ability to generate false information that is “interactive, personalized [and] persuasive,” and said more work needed to be done on that front.

https://www.forbes.com/sites/siladityaray/2023/05/25/chatgpt-could-leave-europe-openai-ceo-warns-days-after-urging-us-congress-for-ai-regulations/

ChatGPT Is Becoming Like Google To Me, by Rainbow Vegan on Medium, Apr 25, 2023

I’ve only been using ChatGPT for a few weeks now and it is quickly becoming my go-to when I need an answer to life’s questions like “what’s for dinner?” and “how do I solve this equation with exponents and then write the answer in scientific notation?”. I used to google these things but now I’m inclined to ask ChatGPT to see what AI has to say. Recipes are great because you type in what you want, refine with what you don’t want, and you get a recipe. If you don’t like that one, just hit “regenerate response” until you find one you like.

https://medium.com/creativeai/chatgpt-is-becoming-like-google-to-me-2d3087b45868

The real effect, the Russian activists told me, was not to brainwash readers but to overwhelm social media with a flood of fake content, seeding doubt and paranoia, and destroying the possibility of using the Internet as a democratic space. One activist recalled that a favorite tactic of the opposition was to make anti-Putin hashtags trend on Twitter. Then Kremlin trolls discovered how to make pro-Putin hashtags trend, and the symbolic nature of the action was killed. “The point is to spoil it, to create the atmosphere of hate, to make it so stinky that normal people won’t want to touch it,” the opposition activist Leonid Volkov told me.

https://www.newyorker.com/news/news-desk/the-real-paranoia-inducing-purpose-of-russian-hacks

From the simple acquisition of data from the environment, to the use of the most sophisticated semantic memories, from the control of gestures to decision making in complex situations, all of the “cognitive processes” allow humans to live reasonably in the world. The impairment of cognitive processes has two harmful consequences: i) Contextual maladaptation, resulting in errors, missed gestures or temporary inhibition; and ii) Lasting disorder, which affects the personality and transforms its victim by locking him or her into a form of behavioral strangeness or inability to understand the world. In the first case, it is a question of causing transitory consequences, circumscribed by a particular critical environment (cf. Figure 4-1). The second concerns the transformation of the decision-making principles of individuals who then become disruptors or responsible for erroneous actions, or even non-action (cf. Figure 4-2).

https://web.archive.org/web/20220310080121/https://www.innovationhub-act.org/sites/default/files/2022-03/Cognitive%20Warfare%20Symposium%20-%20ENSC%20-%20March%202022%20Publication.pdf

The plodding repetition of conspiratorial lies can lead to “cognitive exhaustion.” But it goes deeper than that. Peter Pomerantsev, author of the book This Is Not Propaganda: Adventures in the War Against Reality, popularized the concept of “censorship by noise” in which governments “create confusion through information—and disinformation—overload.” In time, people become overwhelmed, and even cognitively debilitated, by the “onslaught of information, misinformation and conspiracy theories until [it] becomes almost impossible to separate fact from fiction, or trace an idea back to its source.” And so “censorship by noise,” particularly common in regions governed autocratically, leads people to experience crushing anxiety coupled with a markedly weakened motivation to fact-check anything anymore. They may then “like” or share information without critical review because they lack the energy and motivation to take the extra steps to check it out.

https://www.psychologytoday.com/us/blog/misinformation-desk/202112/giving-informational-learned-helplessness

Information has been a vital component of warfare since the earliest recorded battles. In the 1469 BC Battle of Megiddo, the Hyksos King of Kadesh, who led a revolt of Palestinian and Syrian tribes against the Egyptian pharaoh, Thutmose III, was missing critical information as to the disposition of the Egyptian army. Anticipating an Egyptian attack on the stronghold city of Megiddo, the Hyksos king assessed the large Egyptian army would likely approach using one of two larger roads to the east and west of the city, and he divided his forces to intercept them. Using information gained from his scouts and discerning that the rebel leaders expected him to approach by these two broad roads, Thutmose instead chose a third, narrow road that led to the south of the city.

https://www.airuniversity.af.edu/Portals/10/ASPJ/journals/Volume-35_Issue-3/SC-Morabito.pdf

Axios: The global business of professional trolling by Sara Fischer - Apr 13, 2021

Troll farms can create a symbiotic relationship between political actors eager to manipulate adversaries and developing nations eager for cash. CNN, in conjunction with Clemson University, last year uncovered a major troll operation in Ghana being used to sow division among Americans ahead of the 2020 election. The operation was linked back to the Russian state-backed troll operation called the Internet Research Agency. Carroll, a 20-year veteran with the FBI, said that in his time investigating troll operations, he saw many from places like Vietnam, Philippines, and Malaysia — "places where there's a lot of cheap labor and little oversight."

https://www.axios.com/2021/04/13/trolls-misinformation-facebook-twitter-iran

The global anti-vaccination industry, including influencers and followers, generates up to $1.1 billion in annual revenue for social media giants, according to a damning new report published this week. Anti-vaccine content creates a vast amount of engagement for leading technology platforms, including Facebook and Instagram, with an estimated total social media audience of 62 million people. The arrangement works both ways, with the anti-vax industry earning up to $36million a year.

https://www.codastory.com/waronscience/social-media-profit-pandemic-antivax/

As ChatGPT gains more popularity, many have become accustomed to its standard functions and are using ChatGPT in various ways. However, what many don’t realize is that this AI has a bunch of advanced capabilities beyond…

https://medium.com/artificial-corner/i-used-chatgpt-every-day-for-5-months-here-are-some-hidden-gems-that-will-change-your-life-a451e2093097

“it's a matter of opinion on whether or not the script would be ‘good enough’ on first pass.” But it’s not a matter of opinion, really. And it’s okay to say it’s not. Using current tech, there is no case in which ChatGPT could produce anything remotely close to a usable script. Most “AI” pufferists will concede this point upon pushback. But, they always follow up by insisting AI can still reduce net writing labor hours by creating content a human writer could then “modify,'' with the general idea labor has been saved.

https://www.columnblog.com/p/as-tv-writers-strike-us-media-uncritically

Douglas Rushkoff: “I just spent the last week or two grading a stack of undergraduate papers and almost half of them were written by ChatGPT I kid you not. And it made me really sad about teaching. I was just like, screw this, why the heck am I sitting here reading AI written papers and then trying to decide what to do about it? But instead of giving up it kinda made me want to double down and I started thinking long and hard about people who end up in courses of study for all the wrong reasons, which is the only reason they’d be using an AI to write their paper. And it got me thinking even more about trying to create the kind of program where no one would even think to delegate their assignments to an AI platform.”

https://www.teamhuman.fm/episodes/243-claire-leibowicz-justin-hendrix-john-borthwick

Robin Dunbar, Grooming, Gossip, and the Evolution of Language (1996)

The model showed that so long as dialects remained constant through time, the imitator cheat strategy was a very successful one and thrived at the expense of the others. However, if dialects changed moderately quickly (on the scale of generations), cheating strategies found it almost impossible to gain a foothold in a population of co-operators provided at least that individuals can remember whom they have played against in the past. A dialect that continues to evolve provides a secure defence against marauding free-riders. It seems likely, then, that dialects arose as an attempt to control the depredations of those who would exploit people's natural co-operativeness. We know instantly who is one of us and who is not the moment they open their mouths. How many times in history, for example, have the underdogs in a conflict been caught out by their inability to pronounce words the right way.

https://www.hup.harvard.edu/catalog.php?isbn=9780674363366

Often compared to Big Tobacco for the ways in which their products are addictive and profitable but ultimately unhealthy for users, social media’s biggest players are facing growing calls for both accountability and regulatory action. In order to make money, these platforms’ algorithms effectively function to keep users engaged and scrolling through content, and by extension advertisements, for as long as possible.

https://time.com/6127981/addictive-algorithms-2022-facebook-instagram/

Daniel Kahneman, Thinking, Fast and Slow, 2013

An ironic example that Thaler related in an early article remains one of the best illustrations of how mental accounting affects behavior: Two avid sports fans plan to travel 40 miles to see a basketball game. One of them paid for his ticket; the other was on his way to purchase a ticket when he got one free from a friend. A blizzard is announced for the night of the game. Which of the two ticket holders is more likely to brave the blizzard to see the game? The answer is immediate: we know that the fan who paid for his ticket is more likely to drive. Mental accounting provides the explanation. We assume that both fans set up an account for the game they hoped to see. Missing the game will close the accounts with a negative balance. Regardless of how they came by their ticket, both will be disappointed— but the closing balance is distinctly more negative for the one who bought a ticket and is now out of pocket as well as deprived of the game. Because staying home is worse for this individual, he is more motivated to see the game and therefore more likely to make the attempt to drive into a blizzard. These are tacit calculations of emotional balance, of the kind that System 1 performs without deliberation. The emotions that people attach to the state of their mental accounts are not acknowledged in standard economic theory. An Econ would realize that the ticket has already been paid for and cannot be returned. Its cost is “sunk” and the Econ would not care whether he had bought the ticket to the game or got it from a friend (if economists have friends). To implement this rational behavior, System 2 would have to be aware of the counterfactual possibility: “Would I still drive into this snowstorm if I had gotten the ticket free from a friend?” It takes an active and disciplined mind to raise such a difficult question.

http://dspace.vnbrims.org:13000/xmlui/bitstream/handle/123456789/2224/Daniel-Kahneman-Thinking-Fast-and-Slow-.pdf

NISOS - Research: Election Manipulation as a Business Model, May 24, 2023

Predictvia is a predictive analytics firm headquartered in Venezuela and Florida. Chief executive officer Ernesto Olivo Valverde and Maria Acedo founded the company in 2013. Predictvia was built on their Seentra platform, which derived from the Tucomoyo “predictive analysis platform” that Olivo Valverde’s prior company Ing3nia developed. (see source 1 in appendix) In 2013, tucomoyo[.]com began redirecting to Ing3nia’s website.

Discourse and Election Manipulation Claims

Predictvia claims to use artificial intelligence (AI) to manipulate public discourse using fake social media accounts. (see source 2 in appendix) Predictvia claims its platform conducts the following activities: (see source 3 in appendix)

- The Seenatra analytics platform identifies “human interests” and other data gathered from social media or directly from users via the DAS intelligent sampling system, recording topic data and generating tags.
- Run survey processes to verify which tags have the most activity of interest.
- Deployment of campaigns to those social media environments to influence and manipulate public discourse.

https://www.nisos.com/research/election-manipulation-business-model/

This propaganda feedback loop demonstrates the power of inundation, repetition, emotional/social contagions, and personality bias confirmations, as well as demonstrating behaviours of people preferring to access entertaining content that does not require ‘System 2’ critical thought. Audiences encountered multiple versions of the same story, propagated over months, through their favoured media sources, to the point where both recall and credibility were enhanced, fact-checkers were overwhelmed and a ‘majority illusion’ was created.

https://researchcentre.army.gov.au/library/land-power-forum/effectiveness-influence-activities-information-warfare

“From a bad actor’s perspective, ChatGPT is the new crypto,” Guy Rosen, Meta’s chief information security officer, told reporters, meaning scammers have quickly moved to exploit interest in the technology. Since March alone, the company said it had blocked the sharing of more than 1,000 malicious web addresses that claimed to be linked to ChatGPT or related tools. Some of the tools include working ChatGPT features but also contain malicious code to infect users’ devices. Meta said it had “investigated and taken action against malware strains taking advantage of people’s interest in OpenAI’s ChatGPT to trick them into installing malware pretending to provide AI functionality.”

https://www.cnn.com/2023/05/03/tech/chatgpt-hackers-meta/index.html

- annotator reporting bias: When users rely on automation as a heuristic replacement for their own information seeking and processing [268]. A form of individual bias but often discussed as a group bias, or the larger effects on natural language processing models.
- automation complacency: When humans over-rely on automated systems or have their skills attenuated by such over-reliance (e.g., spelling and autocorrect or spellcheckers).
- loss of situational awareness bias: When automation leads to humans being unaware of their situation such that, when control of a system is given back to them in a situation where humans and machines cooperate, they are unprepared to assume their duties. This can be a loss of awareness over what automation is and isn’t taking care of.

https://doi.org/10.6028/NIST.SP.1270 https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.1270.pdf

Daniel Kahneman, Thinking, Fast and Slow, 2013

System 1 has been shaped by evolution to provide a continuous assessment of the main problems that an organism must solve to survive: How are things going? Is there a threat or a major opportunity? Is everything normal? Should I approach or avoid? The questions are perhaps less urgent for a human in a city environment than for a gazelle on the savannah, but we have inherited the neural mechanisms that evolved to provide ongoing assessments of threat level, and they have not been turned off. Situations are constantly evaluated as good or bad, requiring escape or permitting approach. Good mood and cognitive ease are the human equivalents of assessments of safety and familiarity.

http://dspace.vnbrims.org:13000/xmlui/bitstream/handle/123456789/2224/Daniel-Kahneman-Thinking-Fast-and-Slow-.pdf

Daniel Kahneman, Thinking, Fast and Slow, 2013

The distinction between fast and slow thinking has been explored by many psychologists over the last twenty-five years. For reasons that I explain more fully in the next chapter, I describe mental life by the metaphor of two agents, called System 1 and System 2, which respectively produce fast and slow thinking. I speak of the features of intuitive and deliberate thought as if they were traits and dispositions of two characters in your mind. In the picture that emerges from recent research, the intuitive System 1 is more influential than your experience tells you, and it is the secret author of many of the choices and judgments you make. Most of this book is about the workings of System 1 and the mutual influences between it and System 2.

http://dspace.vnbrims.org:13000/xmlui/bitstream/handle/123456789/2224/Daniel-Kahneman-Thinking-Fast-and-Slow-.pdf

PBS Hacking Your Mind - Living on Autopilot - Episode 101

Kahneman and Tversky helped start a scientific revolution by demonstrating that, far from always being rational and analytical, like Mr. Spock, we make most of our decisions by relying on what Kahneman calls fast thinking. Decisions made when we're thinking fast are nonconscious, intuitive, effortless, shaped by our gut feelings.

https://www.pbs.org/video/living-on-auto-pilot-5p5jct/

The only way out is to teach the children well. This means more than selecting a different or better language model. We are the language model. The only alternative is to start acting and speaking the ways we want our AIs to act and speak. If we continue to act like evil shits, our AIs will act like evil shits too. We have given birth to a technology that will not only imitate us, but amplify and accelerate whatever we do. It’s time to grow up. The children are watching.

https://www.teamhuman.fm/episodes/245-kibbitz-room

The Techno Social - Mirror, Mirror: The lure of the AI selfie, January 27, 2023, by Naomi Smith

To me this example also highlights the problem with AI art generators is the same as it is with all AI. It reproduces cultural biases and values and in doing so locks us into repeating past patterns, behaviours and values. It feels to me as it reflects and reproduces dominant norms back to us that it narrows all the ways it is possible to be a person in the world.

https://thetechnosocial.com/blog/mirrormirror

Automating Inequality, by Virginia Eubanks

While we all live under this new regime of data analytics, the most invasive and punitive systems are aimed at the poor. In Automating Inequality, Virginia Eubanks systematically investigates the impacts of data mining, policy algorithms, and predictive risk models on poor and working-class people in America. The book is full of heart-wrenching and eye-opening stories, from a woman in Indiana whose benefits are literally cut off as she lays dying to a family in Pennsylvania in daily fear of losing their daughter because they fit a certain statistical profile.

https://virginia-eubanks.com/automating-inequality/

That’s why, for many, the very concept of predictive policing itself is the problem. The writer and academic Dorothy Roberts, who studies law and social rights at the University of Pennsylvania, put it well in an online panel discussion in June. “Racism has always been about predicting, about making certain racial groups seem as if they are predisposed to do bad things and therefore justify controlling them,” she said. Risk assessments have been part of the criminal justice system for decades. But police departments and courts have made more use of automated tools in the last few years, for two main reasons. First, budget cuts have led to an efficiency drive. “People are calling to defund the police, but they’ve already been defunded,” says Milner. “Cities have been going broke for years, and they’ve been replacing cops with algorithms.”

https://www.technologyreview.com/2020/07/17/1005396/predictive-policing-algorithms-racist-dismantled-machine-learning-bias-criminal-justice/

Cognitive Crucible podcast - #146 SEBASTIAN BAE ON GAMING

But the idea for us is game designers - like should we use these? We often use illustrators that are human and graphic artists that employ our vision, but should we - is it ethically okay to - use these generative AIs? Especially since they use copyright images of others, often of our peers who are graphic artists, right, and use their services. I'm like, you should use generative AI for prototyping and things that are quick and dirty, but for commercialization we should definitely never use it in my opinion, because you should use copyright work of original artists that is designed for your game and use that generative AI just as a way for you to be able to explain your vision better to your illustrator. That's how I see it but other people disagree.

https://information-professionals.org/episode/cognitive-crucible-episode-146/

Data broker 4 advertised highly sensitive mental health data to the author, including names and postal addresses of individuals with depression, bipolar disorder, anxiety issues, panic disorder, cancer, PTSD, OCD, and personality disorder, as well as individuals who have had strokes and data on those people’s races and ethnicities. Two data brokers, data broker 6 and data broker 9, mentioned nondisclosure agreements (NDAs) in their communications, and data broker 9 indicated that signing an NDA was a prerequisite for obtaining access to information on the data it sells.

https://techpolicy.sanford.duke.edu/data-brokers-and-the-sale-of-americans-mental-health-data/

BBC Trending (podcast) - Brazil’s real life trolls

"Trolls are necessary and I'm going to explain why. We have a troll farm. A lot of them. What we don't use are bots. Bots are different things. You can buy it in India and they give you 10,000 likes in a second. That doesn't work because it's not legitimate. What we do, for example with trolls, is to generate some kind of relevance within the social network's algorithms. They have become very rigid about what they show and what they don't. And that has to do with the relevance of the publication. So what trolls do is give relevance to a certain publication. Good publicity, so that it can be shown more than other publications."

https://www.bbc.co.uk/programmes/w3ct5d8y

Miller was impressed by the system’s ability to copy his and his friends’ mannerisms. He says some of the conversations felt so real — like an argument about who drank Henry’s beer — that he had to search the group chat’s history to check that the model wasn’t simply reproducing text from its training data. (This is known in the AI world as “overfitting” and is the mechanism that can cause chatbots to plagiarize their sources.) “There’s something so delightful about capturing the voice of your friends perfectly,” wrote Miller in his blog post.

https://www.theverge.com/2023/4/13/23671059/ai-chatbot-clone-group-chat

Results: Automation complacency occurs under conditions of multiple-task load, when manual tasks compete with the automated task for the operator's attention. Automation complacency is found in both naive and expert participants and cannot be overcome with simple practice. Automation bias results in making both omission and commission errors when decision aids are imperfect. Automation bias occurs in both naive and expert participants, cannot be prevented by training or instructions, and can affect decision making in individuals as well as in teams. While automation bias has been conceived of as a special case of decision bias, our analysis suggests that it also depends on attentional processes similar to those involved in automation-related complacency.

https://pubmed.ncbi.nlm.nih.gov/21077562/

"Hallucinations" are a major limitation of generative AI technologies at this time, de Clercq noted. They occur, he said, because in training, the technology "rarely encounters places in which the author of the text will state that they don't know something." "The model rarely expresses doubt or uncertainty because it's rarely found in written text," he added. "This is probably the biggest hindrance of using a model such as this. As of yet, there is no real way to express uncertainty." Given that limitation, de Clercq believes medical applications of the technology "should not be done unless we achieve such a level of distinction between, 'This is what the model puts out in terms of, language-wise, what would probabilistically follow these words,' and, 'This is something that the model is certain of because of these specific facts.'" Asch agreed that ChatGPT and generative AI technologies need to be deployed safely and judiciously in healthcare at this time.

https://www.medpagetoday.com/special-reports/exclusives/103961

American Bar Association - Implicit Bias and Racial Disparities in Health Care, by Khiara M. Bridges

NAM found that “racial and ethnic minorities receive lower-quality health care than white people—even when insurance status, income, age, and severity of conditions are comparable.” By “lower-quality health care,” NAM meant the concrete, inferior care that physicians give their black patients. NAM reported that minority persons are less likely than white persons to be given appropriate cardiac care, to receive kidney dialysis or transplants, and to receive the best treatments for stroke, cancer, or AIDS. It concluded by describing an “uncomfortable reality”: “some people in the United States were more likely to die from cancer, heart disease, and diabetes simply because of their race or ethnicity, not just because they lack access to health care.”

https://www.americanbar.org/groups/crsj/publications/human_rights_magazine_home/the-state-of-healthcare-in-the-united-states/racial-disparities-in-health-care/

Normal adult kidneys function around or above a score of 90, while patients can be added to the kidney transplant waitlist once they hit 20 or below. Patients who are Black automatically have points added to their score, which can make results appear more normal than they might be — which in turn, could delay needed treatment. “When science comes out with a statement that really aligns with what people believe — in this case, oh, of course Black people are different — no one questions it,” said Vanessa Grubbs, an associate professor of nephrology at University of California, San Francisco, and co-author of one petition. “This equation assumes that Black people are a homogeneous group of people, and doesn’t take into account, how Black is Black enough?” Grubbs and others in the field have advocated for years to move away entirely from using muscle mass as a consideration, instead arguing that the test should only take age, gender, and creatinine levels into account.

https://www.statnews.com/2020/07/17/egfr-race-kidney-test/

To determine how well kidneys work, SOFA incorporates creatinine, a waste product of muscles, found in blood. The main problem with creatinine is that it is myopic to view it as an objective measure of kidney function alone: it simultaneously measures social disadvantages that may cause higher creatinine. (The same point applies to considering existing comorbidities in ways that reduce chances of receiving a ventilator, as five of the published state frameworks do.15) Some literature has suggested uniform differences in creatinine levels by race, and historically higher average creatinine levels in Black people have been attributed to higher muscle mass.17 However, there is weak scientific evidence for this hypothesis, and increasing awareness that measuring differences across races is severely complicated by the fact that race is a social construct.18 19 Further, a biological trait cannot be mapped categorically onto a group of people that is both socially defined and composed of widely differing physiological profiles that reflect different circumstances of living far more than genetics.

https://jme.bmj.com/content/48/2/126

The adherents of the absurd and repulsive Great Barrington Declaration, with all their bogus focused “protection” talk and their shameless promotion of deliberate infection, exploit these misunderstandings, and the information gaps left void by an absence of public health goals that would bring salubrious policies. They use advertising techniques and cognitive biases to trip people up so it doesn't matter that there’s no “getting it over with” since there’s no lasting immunity, or in fact it might even harm the immune system8 - as was found with measles. It doesn’t even matter that “delaying the inevitable” is quite obviously the right thing to do. Almost every living creature spends a lifetime delaying illness, injuries, and death. Why would we even have healthcare and doctors if we didn’t want that? Of course we should delay injury & death as long as we can.

https://teamshuman.substack.com/p/herd-death-the-economy-demands-full

I, Robot - From Wikipedia, the free encyclopedia

The book also contains the short story in which Asimov's Three Laws of Robotics first appear, which had large influence on later science fiction and had impact on thought on ethics of artificial intelligence as well. Other characters that appear in these short stories are Powell and Donovan, a field-testing team which locates flaws in USRMM's prototype models.

https://en.wikipedia.org/wiki/I,_Robot

Sometime around the end of the 2000s, it seems that some effective altruists came across Bostrom’s work and were struck by an epiphany: If the aim is to positively influence the greatest number of people possible, and if most people who could ever exist would exist in the far future, then maybe what we should do is focus on them rather than current-day people. Even if there’s a tiny chance of influencing these far-future folks living in huge computer simulations, the fact that there are so many still yields the conclusion that they should be our main concern. As MacAskill and a colleague wrote in 2019, longtermism implies that we can more or less simply ignore the effects of our actions over the next 100 or even 1,000 years, focusing instead on their consequences beyond this “near-termist” horizon. It’s not that contemporary people and problems don’t matter, it’s just that, by virtue of its potential bigness, the far future is much more important. In a phrase: Won’t someone consider the vast multitude of unborn (digital) people?

https://www.truthdig.com/articles/longtermism-and-eugenics-a-primer/

Emily M. Bender: “I found that I had to keep pulling the conversation away from the trolley problem. People want to talk about the trolley problem, and self-driving cars, let’s say. What I realized over time was that that problem is really attractive because it doesn’t implicate anybody’s privilege, so it’s easy to talk about. You don’t have to think about your own role in the system, and what’s going on and what might be different between you and a classmate, for example. I think, similarly, these fantasies of world dooming AGI have that same property. It’s very tempting. Take a look at the initial signatories, and the authors of that “AI Pause” letter, they are not people who are experiencing the short end of the stick in our systems of oppression right now. They would rather think about this imaginary sci-fi villain that they can be fighting against, rather than looking at their own role in what’s going on in harms right now.”

https://techwontsave.us/episode/163_chatgpt_is_not_intelligent_w_emily_m_bender

But what I realized was, what we were looking at was a bigger guilt paranoia, where they have always been trying to build a car that could go fast enough to escape from its own exhaust — that they’ve been living with trying to escape externalities. And back in the days when it was people of color in faraway places and their resources that you were taking and their children that you were enslaving, it wasn’t quite as bad as when it was right in your own country. When your own Northern California, Indigenous-made log cabin Wigwam is now being singed with forest fires from your own deforestation practices. What do you think’s going to happen? Now they’re starting to worry, when they see the storming of the Capitol. It got a lot of them scared. It’s like: Uh oh, what power have we unleashed? It’s one thing to not let my own kid use any of the stuff and they don’t. Their kids are going to Rudolf Steiner Schools and Waldorf academies.

https://techwontsave.us/episode/145_trusting_tech_billionaires_is_a_recipe_for_disaster_w_douglas_rushkoff

Over the past week, on 60 Minutes and then the New York Times, Google’s communications team has discussed the importance of artificial intelligence technology in the form of new human-seeming chatbots, highlighting not only the genuine innovation, but also the potential threat of these services to the search giant’s business model. The primary message was, new technology is here, and it’s very cool. But the secondary message is that Google is no longer a monopoly, and that the government should drop its antitrust actions against the search giant.

https://mattstoller.substack.com/p/how-a-google-antitrust-case-could

Well, it's all about how you design it. You pointed out that the checklist when Sully [Chesley "Sully" Sullenberger] was flying the plane over the Hudson, and you turned to it and it says: fly the plane. It's about enabling you to manage algorithms and recipes, but also your brain. Some checklists turn off your brain and some checklists turn on your brain. The safe surgery checklist, the story I described [in the book] of applying it in that context, in a way that has reduced mortality from surgery, it's designed to turn everyone's brain on by saying, "Have you discussed in the room the goals of the patient and anything non-routine about this patient's past medical history before you proceed? Have you made sure that your team tells you the instruments that you need and anticipate are ready? That you've addressed how much blood volume and blood loss everyone should be prepared for?" So, it's turning everyone's brain on instead of turning them off.

https://www.medpagetoday.com/opinion/faustfiles/104417

Within minutes of my decision to hand my life over to AI, ChatGPT suggested that, if able, I should go outside and play with my dog instead of work. I had asked the chatbot to make the choice for me, and it had said that I should prioritize “valuable experiences” that contribute to my “overall well-being.” This instruction was welcome, as it was beautiful outside and, more importantly, not even noon on Monday, so I dutifully did as I was told.

https://www.vice.com/en/article/5d93p3/what-happens-when-you-ask-ai-to-control-your-life

It Could Happen Here podcast - How Scared Should You Be About AI? April 4, 2023

Noah Giansiracusa: "This is such a tempting trap, that AI is super-intelligent in some respects. It's done amazing at chess, amazing at Jeopardy, amazing at various things. ChatGPT is amazing at these conversations. So what happens is, it's so tempting to think AI just equals super smart and because it can do those things and now look it can converse that it must be this super intelligent conversational entity. And it's really good at taking text that's on the web that it's already looked at and kind of spinning it around and processing. It can come up with poems in weird forms. But that doesn't mean it is super intelligent in all respects. For instance one of the main issues is to hack civilization to manipulate us with language it has to kind of know what impact its words have on us. And it doesn't really have that. It just has a little conversation text box and I can give it a thumbs up or a thumbs down. So the only data that it's collecting from me when it talks to me, any of these chatbots, is did I like the response or not. That's pretty weak data to try to manipulate me, it's so basic, that's not that different than when I watch YouTube videos, YouTube knows what videos I like and what I don't like. Would you say that YouTube has hacked civilization? No it's addicted a lot of us, but it's not hacked us."

Robert Evans: "People have hacked YouTube and that has done some damage to other people."

https://www.iheart.com/podcast/105-it-could-happen-here-30717896/episode/how-scared-should-you-be-about-112185754/

CounterPunch - The Robots Taking the Jobs Industry, by Dean Baker, February 11, 2019

Replacing human labor with technology is a very old story. It’s called “productivity growth.” We’ve been seeing it pretty much as long as we have had a capitalist economy. In fact, this is what allows for sustained improvements in living standards. If we had not seen massive productivity growth in agriculture, then the bulk of the country would still be working on farms, otherwise we would be going hungry. However, thanks to massive improvement in technology, less than 2 percent of our workforce is now employed in agriculture. And, we can still export large amounts of food. If the robots taking our jobs industry were around a hundred years ago, it would be warning about gas powered tractors eliminating the need for farm labor. We would be hearing serious sounding discussion on our radio shows (we will steal radios from the 1919 future) with leading experts warning about how pretty soon there would be no work for anyone. They would tell us we have to prepare for this dark future by fundamentally reorganizing society. Okay, that story is about as wrong as could possibly be the case, but if anyone buys the robots taking our jobs line, then they better try to figure out why this time is different. After all, what difference does it make if a worker loses their job to a big tractor or they lose their job to a robot?

https://www.counterpunch.org/2019/02/11/the-robots-taking-the-jobs-industry/

Across town, Christopher Wylie, the whistleblower who sounded the alarm about Cambridge Analytica in a series of March media reports, struck a different tone, describing the firm’s efforts to drive down voter turnout among specific demographic groups. Wylie told an audience at a Washington Post event he “was aware of various projects where voter suppression” was discussed at the firm, including efforts to “demotivate turnout” by certain “target groups,” primarily African Americans.

https://www.politico.com/story/2018/06/19/uk-professor-cambridge-analytica-facebook-data-654244

Jessica Burn Notice. By Chloe Humbert on Medium, Jan 22, 2023

Jessica Wildfire could be promoting instead people calling on their member of congress about the concerns she shares about — pandemic, war, or whatever. That’s how actual policy change can happen. That’s why the right-wing organizes people to show up at school board meetings, to churn out letters to your Democratic congressman, and to get out the vote to elect any and all Republican candidates. But Jessica Wildfire won’t tell you that — instead, in May 2022, she says without citing evidence (because it’s not really true), that politics is probably too slow to be of much use. Jessica Wildfire: May 8, 2022. “There’s still something to be said for engaging in the political process, but it’s slow and grinding work. It won’t save us in the short term. Political activism takes a long time.” No it actually isn’t and doesn’t.

https://medium.com/@watermelonpunch.com/jessica-burn-notice-188eea59efcb

It’s called “accelerationism,” and it rests on the idea that Western governments are irreparably corrupt. As a result, the best thing white supremacists can do is accelerate their demise by sowing chaos and creating political tension. Accelerationist ideas have been cited in mass shooters’ manifestos — explicitly, in the case of the New Zealand killer — and are frequently referenced in white supremacist web forums and chat rooms. Accelerationists reject any effort to seize political power through the ballot box, dismissing the alt-right’s attempts to engage in mass politics as pointless. If one votes, one should vote for the most extreme candidate, left or right, to intensify points of political and social conflict within Western societies.

https://www.vox.com/the-highlight/2019/11/11/20882005/accelerationism-white-supremacy-christchurch

Great Filter - From Wikipedia, the free encyclopedia

The concept originates in Robin Hanson's argument that the failure to find any extraterrestrial civilizations in the observable universe implies that something is wrong with one or more of the arguments (from various scientific disciplines) that the appearance of advanced intelligent life is probable; this observation is conceptualized in terms of a "Great Filter" which acts to reduce the great number of sites where intelligent life might arise to the tiny number of intelligent species with advanced civilizations actually observed (currently just one: human).[4] This probability threshold, which could lie in the past or following human extinction, might work as a barrier to the evolution of intelligent life, or as a high probability of self-destruction.[1][5] The main conclusion of this argument is that the easier it was for life to evolve to the present stage, the bleaker the future chances of humanity probably are.

https://en.wikipedia.org/wiki/Great_Filter

By reducing morality to an abstract numbers game, and by declaring that what’s most important is fulfilling “our potential” by becoming simulated posthumans among the stars, longtermists not only trivialize past atrocities like WWII (and the Holocaust) but give themselves a “moral excuse” to dismiss or minimize comparable atrocities in the future. This is one reason that I’ve come to see longtermism as an immensely dangerous ideology. It is, indeed, akin to a secular religion built around the worship of “future value,” complete with its own “secularised doctrine of salvation,” as the Future of Humanity Institute historian Thomas Moynihan approvingly writes in his book X-Risk. The popularity of this religion among wealthy people in the West—especially the socioeconomic elite—makes sense because it tells them exactly what they want to hear: not only are you ethically excused from worrying too much about sub-existential threats like non-runaway climate change and global poverty, but you are actually a morally better person for focusing instead on more important things—risk that could permanently destroy “our potential” as a species of Earth-originating intelligent life.

https://www.currentaffairs.org/2021/07/the-dangerous-ideas-of-longtermism-and-existential-risk

Tweet by Robin Hanson, 11:29, Feb 14, 2020

Though it is a disturbing & extreme option, we should seriously consider deliberately infecting folks with coronavirus, to spread out the number of critically ill people over time, and to ensure that critical infrastructure remains available to help sick.

https://web.archive.org/web/20200414160657/https://twitter.com/robinhanson/status/1228400896507367424

Dean Baker: Robots are coming for our jobs? Not so much. By Dean Baker, May 12, 2015

The list of policies that have led to inequality is long. It includes a trade policy designed to whack the middle class; Federal Reserve Board policy that fights inflation at the expense of jobs; a bloated financial sector that relies on government support; and a system of labor-management relations that is skewed against workers. If we really want to address the causes of inequality we have to get over the robots are taking our jobs story, move beyond hand-wringing or Luddism, and accept the harsh reality that people, or their policies, anyway, are to blame — not technology.

https://www.beaconjournal.com/story/opinion/columns/2015/05/12/dean-baker-robots-are-coming/10670852007/

The Cognitive Crucible podcast - #147 Nita Farahany on Cognitive Liberty, May 9, 2023

We've just described a world which increasingly what's happening in our brains can be quantified. And the question is who wants to that quantification and what do they want to do with that data? And so “The Battle for Your Brain” describes a world in which we as individuals may want to have access to that information to be able to understand our own brain activity, to enhance it, to diminish it, to change it, to make choices over our self-determination. But already, it's a world in which employers are trying to get access to that information, to quantify workers' brain activity in their attention, and fatigue levels, and mind-wandering, or even cognitive decline, or cognitive overload. Or corporations are trying to gain access to what's happening in people's brains, to be able to micro-target advertisements, to addict people to technologies and to bring them to their platform over and again. Or even to engage in influence campaigns, as we've seen with things like Cambridge Analytica, or, you know, Facebook's contagion experiment where they literally wanted to see if they could change the feed of people on their social media and if that could change and manipulate their behaviors and their emotions. To governments who are seeking access to brains, to do everything from try to develop novel biometrics to authenticate people at borders, to interrogate criminal suspect brains, or develop cognitive warfare campaigns to try to both influence, but also disable and disorient brains. And so the battle is really I think the battle between individuals for autonomy, and for self-determination of their own brains and mental experiences, versus corporations, versus governments who are trying to access, manipulate and change our brains as well.

https://information-professionals.org/episode/cognitive-crucible-episode-147/

It Could Happen Here podcast - How Scared Should You Be About AI? April 4, 2023

Robert Evans: "While I have many concerns about this technology, it's not that it's gonna hack civilization because like we're really good at doing that to each other. There's always huge numbers of people hacking bits of the populace and manipulating each other. And there always have been, that's why we figured out how to paint."

https://www.iheart.com/podcast/105-it-could-happen-here-30717896/episode/how-scared-should-you-be-about-112185754/