Notes & Transcript: https://chloehumbert.substack.com/p/troll-farms-sock-puppets-and-botnets

The Internet of Fakes — PR Tactics, Troll Farms, Sock Puppets, Botnets, Influencers, Operatives, & Chaos Agents

The media landscape, and particularly the social media landscape, is an information battlefield. It is filled with PR, advertising, targeted marketing, propaganda, high-pressure sales, and influence operations. This is documented fact, supported by evidence, with a financial ecosystem, well funded by corporations, state actors, and a plethora of business…

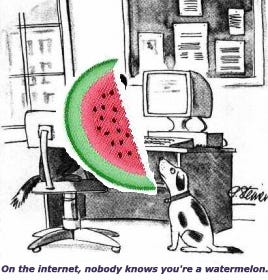

People argue that surely it’s not deliberate, that it’s just regular weird people being foolish, or that it’s just a few bad actors, or that it’s all a big coincidence. Some demand proof and, once given evidence, demand more proof, and people sometimes still demand to know: but why? Some wrongly claim this is just speculation, even with tons of scientific publications, academic lectures, military documentation, and trade news detailing it all. The problem is that people don’t want to believe this information attack is happening, and especially don’t want to believe that we may have been fooled by something. We all have been fooled by disinformation, marketing, and PR at some point, and none of us want to think about that too much. And that’s a big part of why it works so well.

Rand Waltzman: “In a cognitive attack the whole point is that the target shouldn’t know they’re being attacked in order for it to be really effective. So that's the whole trick to keep the target unaware because if the target becomes aware that they’re being attacked in this way, just by them becoming aware it significantly reduces the effect of the attack.”

Cyber criminals use social media botnets to disseminate malicious links, collect intelligence on high profile targets, and spread influence. As opposed to traditional botnets, each social bot represents an automated social account rather than an infected computer. This means building a legion of interconnected bots is much quicker and easier than ever before, all accessible from a single computer. The person commanding the botnet, also known as a bot herder, generally has two options for building their botnet. The first is fairly ad hoc, simply registering as many accounts as possible to a program that allows the herder to post via the accounts as if they were logged in. The second approach is to create the botnet via a registered network application: the attacker makes a phony app, links a legion of accounts, and changes the setting to allow the app to post on behalf of the associated accounts. Via the app, the herder then has programmatic access to the full army of profiles. This is essentially how ISIS built their Dawn of Glad Tidings application, which acts as a centralized hub that posts en masse on behalf of all its users.

And then you see a bunch of accounts that are you know not quite as influential but they play a key role in amplifying the message and these are the red nodes that you see a little bit towards the periphery and the reason they're colored red is because they're likely automated. These are accounts that we you know, we have, I'll tell you more later about our machine learning tools to recognize social bots, and those are likely bots that just automatically retweet everything that comes from certain accounts.
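The two signals described in this quote, node size as the number of retweets and accounts that retweet everything from certain sources, can be illustrated with a small Python sketch. This is only a toy heuristic under stated assumptions, not the machine learning tools the speaker mentions: the account names, the retweet data, and the `min_posts` and `min_share` thresholds are all made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (retweeting_account, source_account, post_id).
RETWEETS = [
    ("amp_1", "source_a", 1), ("amp_1", "source_a", 2),
    ("amp_1", "source_a", 3), ("amp_1", "source_a", 4),
    ("amp_2", "source_a", 1), ("amp_2", "source_a", 2),
    ("amp_2", "source_a", 3), ("amp_2", "source_a", 4),
    ("reader_1", "source_a", 2),
    ("reader_2", "source_a", 4), ("reader_2", "source_b", 9),
]


def node_sizes(retweets):
    """Count how often each source account was retweeted (the node size)."""
    return Counter(source for _, source, _ in retweets)


def blanket_retweeters(retweets, min_posts=3, min_share=0.9):
    """Flag accounts that retweeted nearly every known post from a source.

    min_posts and min_share are illustrative thresholds (assumptions).
    A source's posts are only known here if someone retweeted them.
    """
    posts_by_source = defaultdict(set)
    posts_by_pair = defaultdict(set)
    for account, source, post_id in retweets:
        posts_by_source[source].add(post_id)
        posts_by_pair[(account, source)].add(post_id)

    flagged = set()
    for (account, source), seen in posts_by_pair.items():
        total = len(posts_by_source[source])
        # Skip sources with too few posts to say anything meaningful.
        if total >= min_posts and len(seen) / total >= min_share:
            flagged.add(account)
    return flagged


sizes = node_sizes(RETWEETS)
flagged = blanket_retweeters(RETWEETS)
print(sizes.most_common())
print(sorted(flagged))
```

In this toy data, the two "amp" accounts retweet every post from one source and get flagged, while the occasional readers do not. A real human superfan could trip the same rule, which is one reason actual bot detection relies on many more signals than this.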

Axios - The global business of professional trolling. By Sara Fischer, Apr 13, 2021

Professional political trolling is still a thriving underground industry around the world, despite crackdowns from the biggest tech firms. Why it matters: Coordinated online disinformation efforts offer governments and political actors a fast, cheap way to get under rivals' skin. They also offer a paycheck to people who are eager for work, typically in developing countries. "It's a more sophisticated means of disinformation to weaken your adversaries," said Todd Carroll, CISO and VP of Cyber Operations at CybelAngel.

BBC Trending (podcast) - Brazil’s real life trolls - Sun 23 Apr 2023

"Trolls are necessary and I'm going to explain why. We have a troll farm. A lot of them. What we don't use are bots. Bots are different things. you can buy it in India and they give you 10,000 likes in a second. That doesn't work because it's not legitimate. What we do, for example with trolls, is to generate some kind of relevance within the social network's algorithms. They have become very rigid about what they show and what they don't. And that has to do with the relevance of the publication. So what trolls do is give relevance to a certain publication. Good publicity, so that it can be shown more than other publications."

Right here is where things get a little sketchy, as Hack PR decided to look into gaming Reddit to bring some momentum to their campaign. “I knew that if I could get one of my links to the top of Reddit Politics, I would have a pretty good chance of making the idea spread, so I set that as my goal: Get to the top of Reddit Politics within 24 hours.” What it did next was simple. A Hack PR staffer published a link to a Washington Times article about the campaign, who then purchased every single upvote package on Fiverr.com, for a total cost of $35. The post soon blew up and became the most popular article on r/politics. Hack PR also anonymously spammed over 20,000 media contacts with a link to the Reddit post. Each time a publication covered the news, it would repeat the same process with the Washington Times article.

For as little as $299 a month, YourDigitalFace will “create your new digital face which sells,” reads the pitch on the site. They’ll set you up on Instagram and write at least two custom posts a day, as well as handle all the little finesses that lead to a big social media following, like deploying hashtags and liking your followers. You’re guaranteed a minimum of 1,000 new followers a month. The website includes a “portfolio” of satisfied customers, comprised of screenshots from three Instagram accounts that each boast between 50 thousand and 180 thousand followers.

TribalGrowth - 7 Best Marketplaces To Buy & Sell Social Media Accounts (Ranked). by John Gordon

Social Tradia, Instagram. The Toronto-based firm boasts an easy-to-use website that categorizes accounts for sale based on niche and number of followers. One of the best things about this marketplace is that all transactions are carried out over well-established payment portals.

NISOS - Research: Election Manipulation as a Business Model, May 24, 2023

Predictvia is a predictive analytics firm headquartered in Venezuela and Florida. Chief executive officer Ernesto Olivo Valverde and Maria Acedo founded the company in 2013. Predictvia was built on their Seentra platform, which derived from the Tucomoyo “predictive analysis platform” that Olivo Valverde’s prior company Ing3nia developed. (see source 1 in appendix) In 2013, tucomoyo[.]com began redirecting to Ing3nia’s website.

Discourse and Election Manipulation Claims

Predictvia claims to use artificial intelligence (AI) to manipulate public discourse using fake social media accounts. (see source 2 in appendix) Predictvia claims its platform conducts the following activities: (see source 3 in appendix)

- The Seenatra analytics platform identifies “human interests” and other data gathered from social media or directly from users via the DAS intelligent sampling system, recording topic data and generating tags.
- Run survey processes to verify which tags have the most activity of interest.
- Deployment of campaigns to those social media environments to influence and manipulate public discourse.

What Is a Watering Hole Attack? - Proofpoint.com

A watering hole attack is a targeted attack designed to compromise users within a specific industry or group of users by infecting websites they typically visit and luring them to a malicious site. The end goal is to infect the user’s computer with malware and gain access to the organization’s network. Watering hole attacks, also known as strategic website compromise attacks, are limited in scope as they rely on an element of luck. They do however become more effective, when combined with email prompts to lure users to websites.

Your representative’s office receives your letter and treats your position and interest in the issue as representative of some number of constituents who feel the same as you do but did not have the inclination to write at present. Offices often keep tallies in spreadsheets and track issues. So it’s not just your lonely voice: each person who writes makes a larger difference than you might expect from one person. Pressure on elected government officials through letter campaigns has shaped the laws that govern our lives and protect us from lies and harm, such as car seat belt laws and even the rule that peanut butter has to be made of peanuts and not full of additives!

Transcript:

I'm Chloe Humbert, and I'm not waiting for everybody. And you don't have to either. The Internet of Fakes. Troll Farms, Sock Puppets, and Botnets. A lot of what we see online is not real, and there are reasons for this. There is a whole ecosystem, a whole economy, based around essentially fooling people. People argue, surely it's not deliberate. That it's just regular weird people being foolish, or that it's a few bad actors, or it's a coincidence. Some people, when they hear about this stuff, they demand proof. Once given evidence, they demand more proof. And people still sometimes demand to know, but why? It's very tiring. Even with tons of scientific publications, academic lectures, military documentation, and trade news detailing it all, for some reason it just seems almost unbelievable. But it is absolutely believable. And the reason it works is because people don't realize it's happening. And that's a big part of why it works so well. There was a quote of Rand Waltzman on a podcast with Steve Hassan, the cult expert, and he explained it.

Rand Waltzman: “I mean, the main thing to realize is, you know, sort of the key difference between a cognitive attack and a kinetic attack, a physical attack, is...”

Steve Hassan: “Yeah.”

Rand Waltzman: “...in a kinetic attack, you know you're being attacked. I mean, if somebody's coming at you with a knife or a gun or hurling bombs at you, I mean, there's no doubt you're under attack, right?”

Steve Hassan: “Right.”

Rand Waltzman: “But in a cognitive attack, the whole point is that the target shouldn't know they're being attacked in order for it to be really effective.”

Steve Hassan: “Right.”

Rand Waltzman: “So that's the whole trick, to keep the target unaware, because if the target becomes aware that they are being attacked in this way, just by them becoming aware, it significantly reduces the effect of the attack.”

Chloe Humbert: So what are botnets?
Botnets are automated social accounts. People set up fake social media accounts and then connect them to an automated system, and they can direct these accounts to just automatically send out likes and retweets of particular things. So somebody who has this set up can give one command and say, this tweet, or whatever on Facebook or whatever, you should go in and like this, and then all of these automated accounts do that. So it creates an illusion of popularity and gets it into the algorithm, so that the algorithm then picks it up and says, oh, this is popular. So it's a way of gaming social media search engine optimization. And this is also very well documented.

There's an article by the CEO and founder of ZeroFox from 2015. Darkreading.com, The Rise of Social Media Botnets. In the social internet, building a legion of interconnected bots, all accessible from a single computer, is quicker and easier than ever before. Quote, cyber criminals use social media botnets to disseminate malicious links, collect intelligence on high-profile targets, and spread influence. As opposed to traditional botnets, each social bot represents an automated social account rather than an infected computer. This means building a legion of interconnected bots is much quicker and easier than ever before, all accessible from a single computer. The person commanding the botnet, also known as a bot herder, generally has two options for building their botnet. The first is fairly ad hoc, simply registering as many accounts as possible to a program that allows the herder to post via the accounts as if they were logged in. The second approach is to create a botnet via a registered network application. The attacker makes a phony app, links a legion of accounts, and changes the setting to allow the app to post on behalf of the associated accounts. Via the app, the herder then has programmatic access to a full army of profiles.
This is essentially how ISIS built their Dawn of Glad Tidings application, which acts as a centralized hub that posts en masse on behalf of all its users. Unquote.

And there was an MIT Initiative on the Digital Economy presentation by Filippo Menczer of Indiana University from December of 2022, and here's a clip from that.

Filippo Menczer: “When we look at a network like this, some things pop out. So first of all, we can identify the super spreaders, we can identify those accounts that are playing a key role in amplifying, in generating the message and spreading it, like the big nodes at the center. The size of the node here is the number of retweets. And those are, not surprisingly, accounts associated with the source itself, like Alex Jones and InfoWars. And then you see a bunch of accounts that are, you know, not quite as influential, but they play a key role in amplifying the message, and these are these kind of reddish nodes that you see a little bit towards the periphery. And the reason they're colored red is because they're likely automated. These are accounts that we, you know, I'll tell you more later about our machine learning tools to recognize social bots. And those are likely bots that just automatically retweet everything that comes from certain accounts, in this case, InfoWars. And a lot of humans, likely humans are shown here in blue, are exposed to these, through these amplification efforts.”

Chloe Humbert: People try to tell you that it's not happening, or that that's silly, or it's mostly, oh, it's just happenstance. Oh, it's not really happening. No, it's not a joke. It's literally happening. And tons of money is being poured into this. And there's plenty of evidence. A quote from an Axios article by Sara Fischer from April 2021, The Global Business of Professional Trolling. Quote, Professional political trolling is still a thriving underground industry around the world, despite crackdowns from the biggest tech firms.
Why it matters. Coordinated online disinformation efforts offer governments and political actors a fast, cheap way to get under rivals' skin. They also offer a paycheck to people who are eager for work, typically in developing countries. It's a more sophisticated means of disinformation to weaken your adversaries, said Todd Carroll, unquote.

And here's a quote from the BBC Trending podcast titled Brazil's Real Life Trolls from April of 2023. This is a statement from an actual person who runs a troll farm business.

Fernando Cerimedo: “Trolls are necessary and I'm going to explain why. We have a troll farm. A lot of them. What we don't use are bots. Bots are different things. You can buy it in India and they give you 10,000 likes in a second. That doesn't work because it's not legitimate. What we do, for example with trolls, is to generate some kind of relevance within the social network's algorithms. They have become very rigid about what they show and what they don't. And that has to do with the relevance of that publication. So, what trolls do is give relevance to a certain publication, good publicity, so that it can be shown more than other publications.”

Jonathan Griffin: “The trolls on Cerimedo’s troll farm are fake profiles, which he says he uses to boost engagement on social media platforms. This is against most social media platforms' rules. He denies that his troll farm is immoral, because he says it doesn't hurt anyone.”

Chloe Humbert: So here's an article from July 2017. Astroturfing Reddit Is the Future of Political Campaigning. Quote, right here is where things get a little sketchy, as Hack PR decided to look into gaming Reddit to bring some momentum to their campaign. Quote, I knew that if I could get one of my links to the top of Reddit Politics, I would have a pretty good chance of making the idea spread. So I set that as my goal. Get to the top of Reddit Politics within 24 hours.
What it did next was simple. A Hack PR staffer published a link to a Washington Times article about the campaign, who then purchased every single upvote package on Fiverr.com for a total cost of $35. The post soon blew up and became the most popular article on r/politics. Hack PR also anonymously spammed over 20,000 media contacts with a link to the Reddit post. Each time a publication covered the news, it would repeat the same process with this Washington Times article. Unquote.

This is deliberate. This is from 2017. This isn't something new either. This is what is happening. And a total cost of $35. I mean, it might cost more now because of inflation, but this is not expensive even. So think about the amount of money that a lot of these big corporations, industries, politicians, super PACs, and billionaires have. All of this can be bought, just to blow up a post on some social media platform.

And a Daily Beast article from February of 2018. The headline reads, Russian troll factory alum selling social media mobs for $299 a month. An email address buried in the latest indictment from Robert Mueller reveals a new service for gaming social networks. Quote, For as little as $299 a month, YourDigitalFace will create your new digital face which sells, reads the pitch on the website. They'll set you up on Instagram and write at least two custom posts a day, as well as handle all the little finesses that lead to a big social media following, like deploying hashtags and liking your followers. You're guaranteed a minimum of 1,000 new followers a month. The website includes a portfolio of satisfied customers, comprised of screenshots from three Instagram accounts that each boast between 50,000 and 180,000 followers. Unquote.

And then there's an article I found in TribalGrowth, which is some kind of industry website, and the article was titled 7 Best Marketplaces To Buy & Sell Social Media Accounts (Ranked), by John Gordon.
Quote, The Toronto-based firm boasts an easy-to-use website that categorizes accounts for sale based on niche and number of followers. One of the best things about this marketplace is that all transactions are carried out over well-established payment portals.

So right there, they're telling you this is what happens. Like some rando person could build up a social media account and sell it. If they get enough followers, they have like some kind of niche interest, and they're getting some play, they might even be headhunted and be offered a cash buyout. And then somebody else takes over that account with a particular audience. And then it racks up a bunch of interest, and there's ways to do that, of course, with botnets and troll farms and buying interactions. And often that explains it when people are like, why did this account suddenly start pushing misinformation, or things that are contrary to what they used to say, or there's these subtle inserts of contrary or contrarian ideas? And this is the reason why: because the opposition will buy actual accounts that have a following in a certain interest area, and then specifically buy these beloved accounts in order to insert their own propaganda and their own interests into it. And it's a process, and it happens.

A lot of people try to say, oh, that's paranoid or whatever. This is the evidence. There are actual companies that that's their whole business. This is not a maybe. Not a maybe. And people do this. That's how they make a living. You know, they find a niche place and then they sell the account, or they work for a place that has them set up these fake accounts and build them up so that people can buy them for their propaganda, their advertising needs.

So then a paper from May 24th, 2023, Research: Election Manipulation as a Business Model. Quote, Discourse and Election Manipulation Claims. Predictvia claims to use artificial intelligence, AI, to manipulate public discourse using fake social media accounts.
Unquote. This is a business model. It's not a maybe. This is actually happening. This is what happens on all of social media. And there's no place that it hasn't gone, because they go where the people are. It's a watering hole issue. This is a known strategy in cybersecurity and con artistry. You go where the people are. Prey congregates, you go there. The watering hole.

All of this could serve to make things look more important than they are. And it's inauthentic. And politicians know this. Heads of state know this. Important people know this. And you can get 10,000 likes on a tweet, but it's not worth as much as lobbying an elected representative. So it doesn't really matter how you do on social media. You could have a botnet boost your stuff, but if you're not writing your reps, well, that's what the opposition is doing. They're sending lobbyists and they're writing to your representatives on a regular basis. And that opposition wants to be the only one lobbying your representative. They want you focused on hot takes and sick burns on social media. Don't let anybody silence you. You have a right to speak up too, in the ways that will help bring us good things in our civilization. Write your reps as yourself, as a constituent, so they know what their constituents want.
